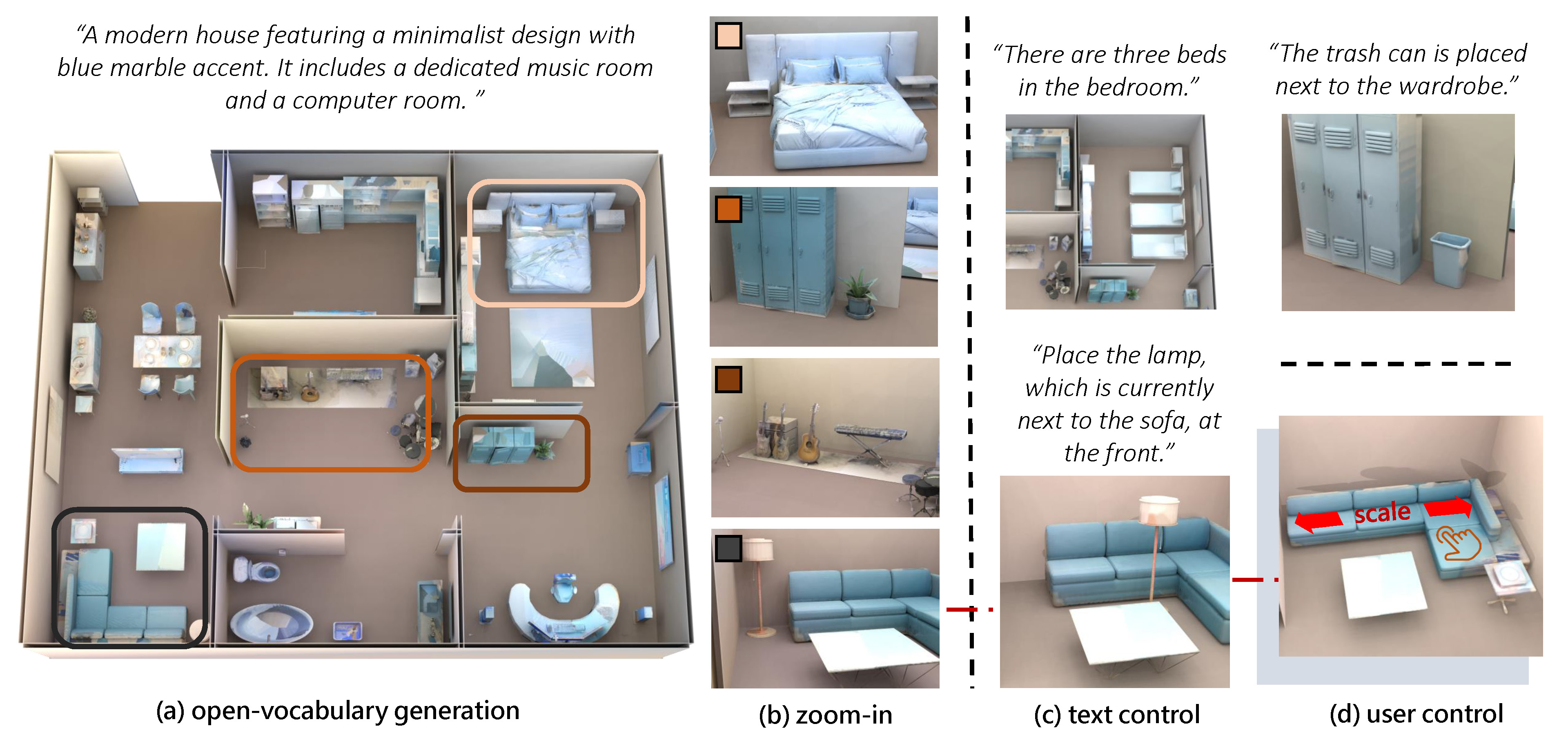

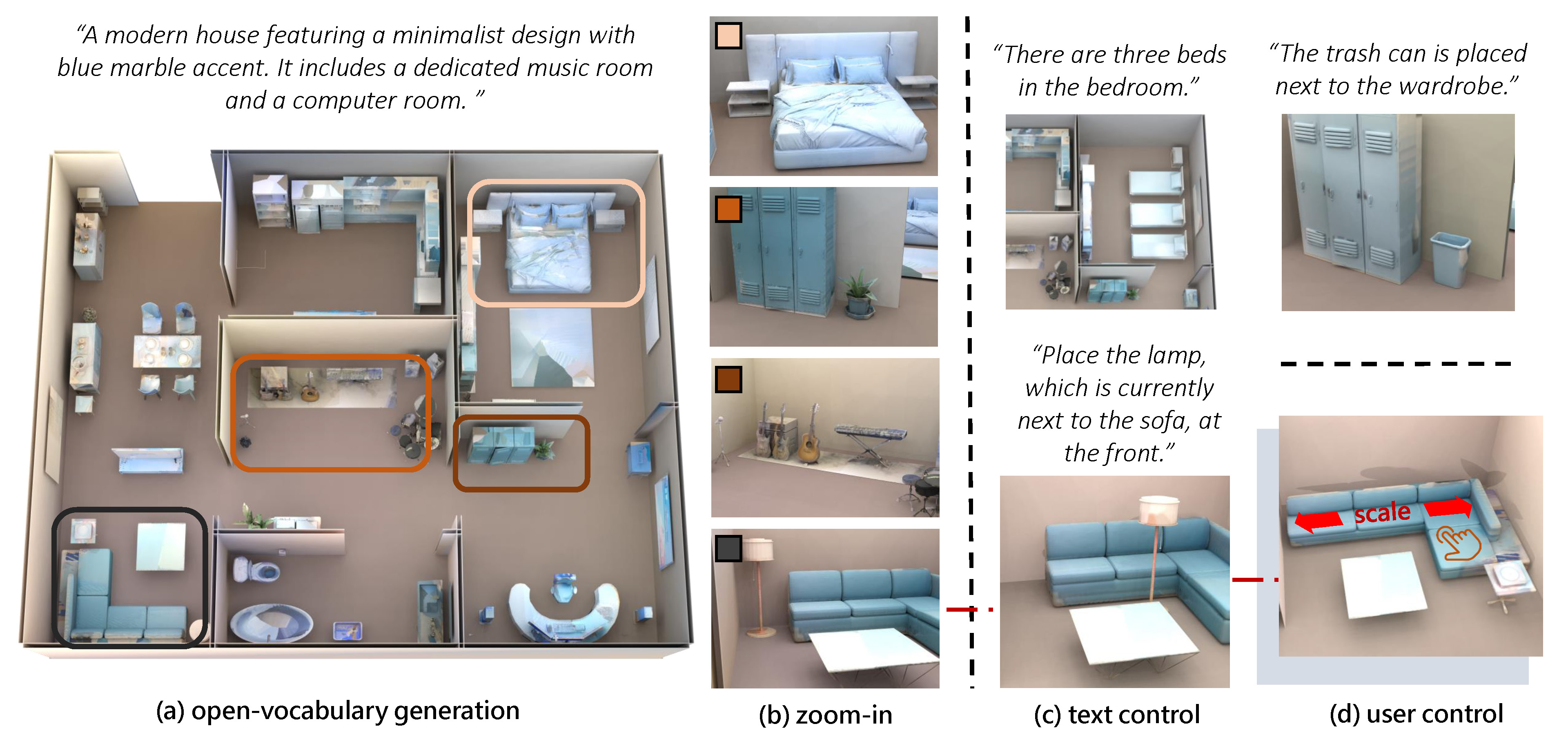

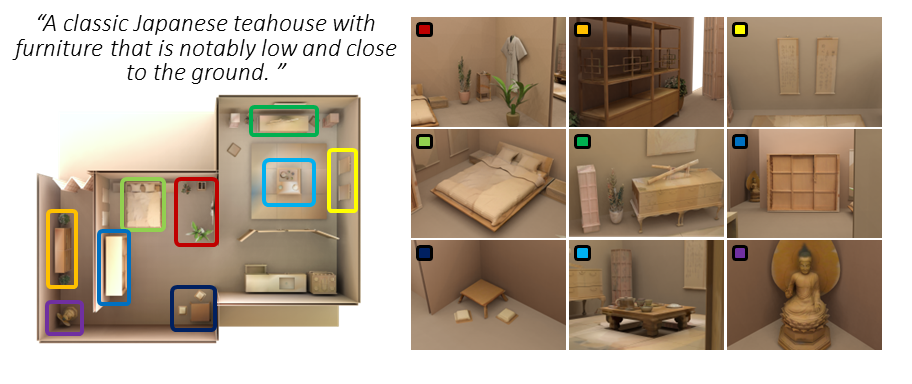

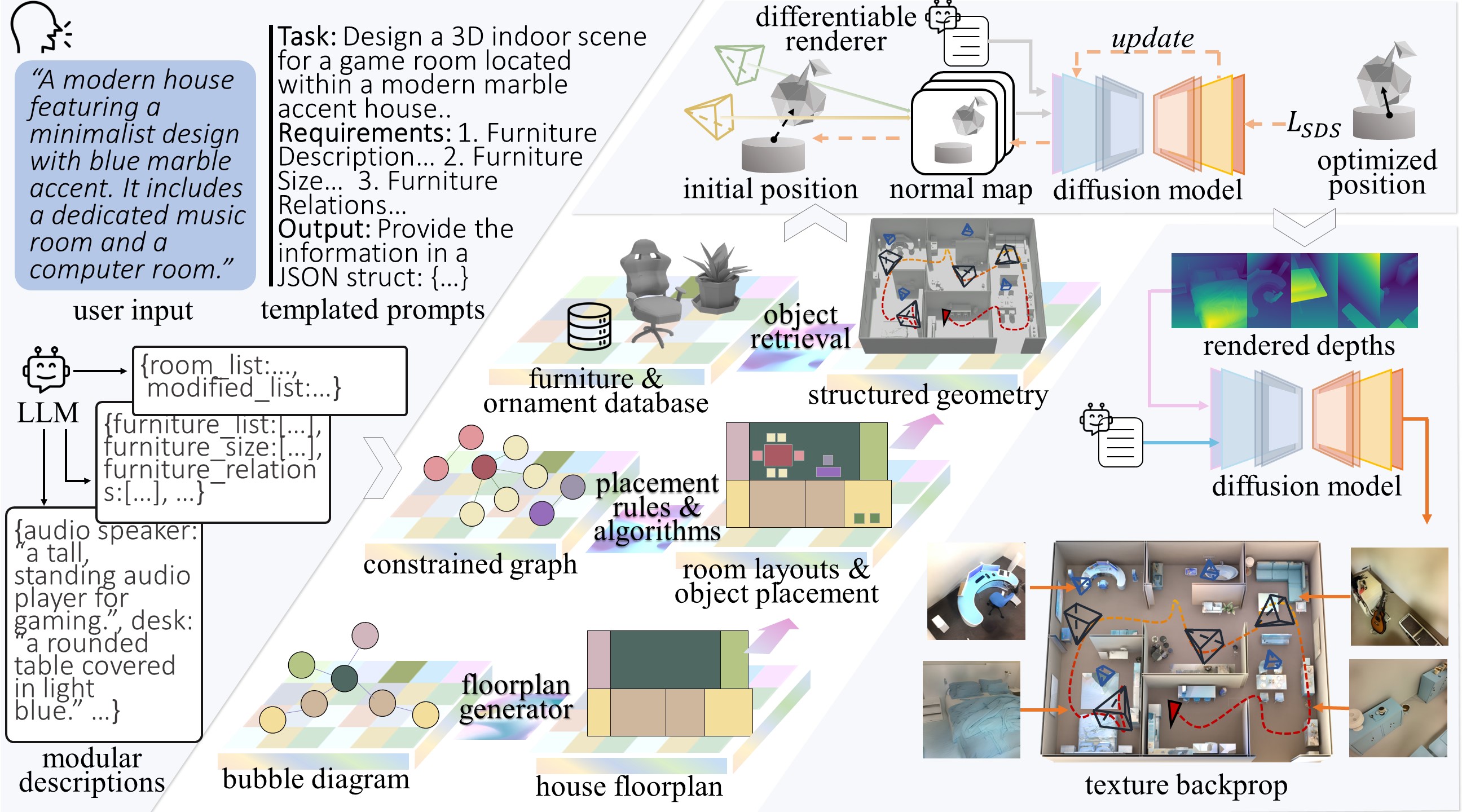

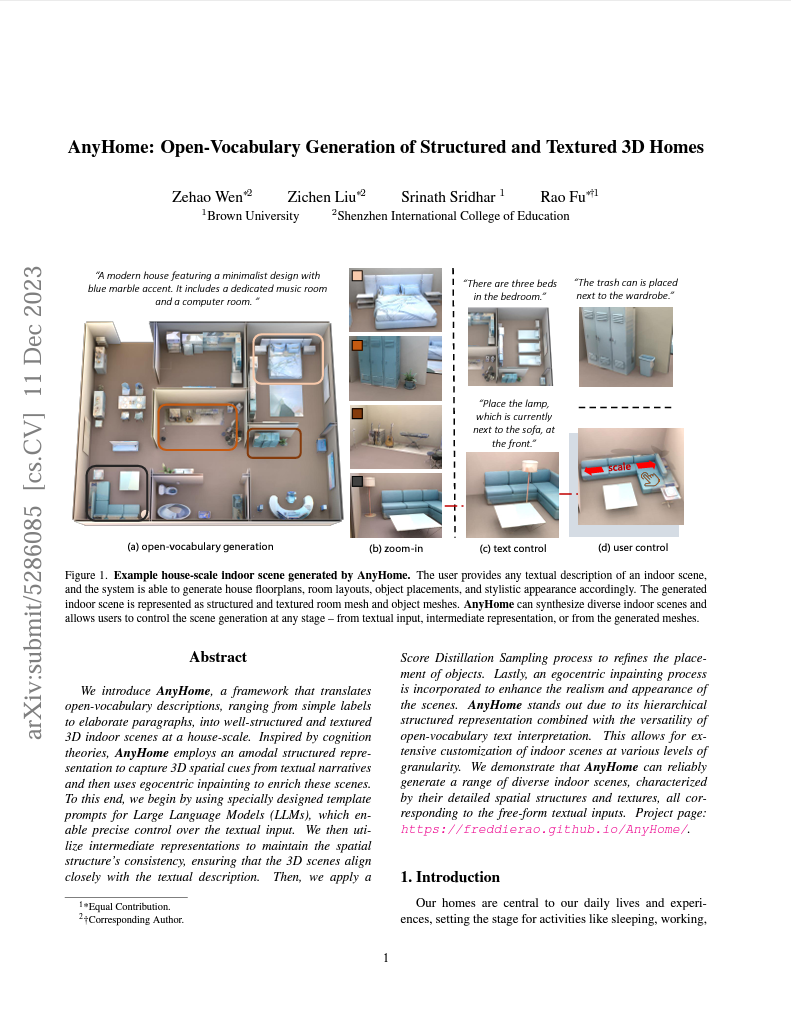

Given free-form text input, our pipeline generates house-scale scenes by: i) comprehending and elaborating the user's textual input by querying an LLM with modulated prompts; ii) converting textual descriptions into structured geometry using intermediate representations; iii) employing a Score Distillation Sampling (SDS) process with a differentiable renderer to refine object layouts egocentrically; and iv) applying depth-conditioned texture inpainting for texture generation.
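
For readers unfamiliar with step iii, the gradient used by Score Distillation Sampling (SDS) is commonly written as below. This is the generic form, not necessarily the exact objective used in this pipeline. The symbol choices are assumptions made for illustration: $\theta$ stands for the object layout parameters being refined, $g$ for the differentiable renderer producing an egocentric view $x = g(\theta)$, $y$ for the text prompt, $\hat{\epsilon}_\phi$ for the noise prediction of a pretrained diffusion model, and $w(t)$ for a timestep-dependent weight.

$$
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}
  = \mathbb{E}_{t,\,\epsilon}\!\left[\, w(t)\,\bigl(\hat{\epsilon}_\phi(x_t;\, y,\, t) - \epsilon\bigr)\,\frac{\partial x}{\partial \theta} \right],
\qquad
x_t = \alpha_t\, x + \sigma_t\, \epsilon,\quad \epsilon \sim \mathcal{N}(0, I)
$$

Under this reading, the rendered view is noised, the diffusion model predicts the noise conditioned on the text, and the residual is backpropagated through the renderer to the layout parameters rather than through the diffusion model itself.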

For inquiries about creating unique scenes or to request the generated mesh files, kindly reach out to Rao at rao_fu@brown.edu.

R. Fu, Z.H. Wen, Z.C. Liu, S. Sridhar. AnyHome: Open-Vocabulary Generation of Structured and Textured 3D Homes. (hosted on arXiv)
